This post is a natural follow-up to the review of corruption statistics over the last few weeks, but it’s actually been on my list to explore ever since I watched John Oliver take on scientific studies (full clip here; I consider it required viewing, so please watch it!).

Here are some of the main takeaways to consider:

1. Scientific studies are often misrepresented by the media. There are many reasons for that, but mostly it comes down to fear and humor: people are more likely to read a story about chocolate causing (or curing) cancer than one saying chocolate tastes good. And who wouldn’t click through on a headline claiming that "smelling farts might prevent cancer"?

2. Scientific studies are often taken out of context, and the fart one certainly was. (Spoiler alert: there is no scientific study saying that smelling farts will prevent cancer.)

3. Scientific studies are often done in a sloppy manner: no controls, too small a study pool, inappropriate dosages, a bias built in from the start, or any number of other flaws. Most of those issues quickly come to light if you look at the actual study, but if you only see the media headline, you are left with the impression that it was a significant, worthwhile study.

4. Scientific studies are not being independently replicated enough because, as Oliver pointed out, few people care who did it the second time around and there is “no Nobel Prize for fact checking.” The glory is in being the first to find something. (How many of you can name the third man on the moon? I’m being generous in assuming you know the second, who walked with Neil…)

5. Something that is statistically significant may not actually be significant in any practical, real-world sense.

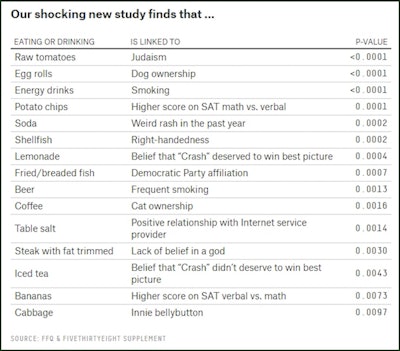

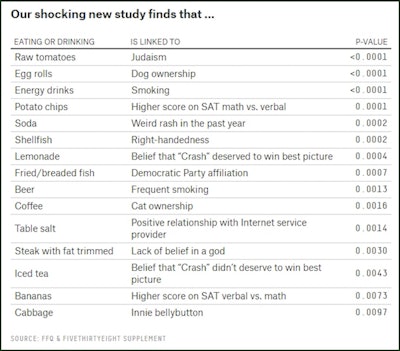
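To make point 5 concrete, here is a minimal Python sketch (standard library only) of a two-sample z-test, assuming both groups are normally distributed with a known, shared standard deviation. The function names and the numbers plugged in are hypothetical, chosen only for illustration. It holds a tiny difference between groups fixed at 0.01 standard deviations and shows how the p-value changes as the sample grows.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_p_value(mean_diff: float, sd: float, n_per_group: int) -> float:
    """Two-sided p-value for a difference in group means (z-test).

    Assumes both groups are normal with the same known standard
    deviation `sd` and the same size `n_per_group`.
    """
    standard_error = sd * sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    # cdf(-|z|) avoids the precision loss of computing 1 - cdf(|z|).
    return 2 * NormalDist().cdf(-abs(z))


def effect_size(mean_diff: float, sd: float) -> float:
    """Standardized effect size (Cohen's d): the difference in SD units."""
    return mean_diff / sd


# Hypothetical example: the "treatment" shifts the average by 0.01 SD.
diff, sd = 0.01, 1.0
for n in (100, 10_000, 1_000_000):
    p = two_sample_p_value(diff, sd, n)
    print(f"n={n:>9,}  effect size={effect_size(diff, sd):.2f}  p={p:.3g}")
```

The effect size never changes, yet with a million people per group the p-value falls far below 0.05. "Statistically significant" only says a difference is unlikely to be exactly zero; it says nothing about whether the difference is big enough to matter.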

Let’s go into some examples. Last week, I promised you a consideration of cabbage and belly buttons, right? That is an example of “p-hacking,” which is part of number 5 on the list above. This is a serious issue, as this blog at the National Institute of Mental Health outlines, or as discussed in these blogs on the Public Library of Science website, one of which goes so far as to say that most scientific studies are false. So just what is p-hacking?

Basically, it is the manipulation of statistics. There are many different ways to do it, but the result is the same: non-significant results are made to look statistically significant, or results are deliberately constructed to give them a false appearance of significance.
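One well-known way to p-hack is "optional stopping": checking the p-value every time a few more participants come in and stopping the moment it dips below 0.05. The minimal Python sketch below (standard library only) simulates studies in which there is no real effect at all. The function names, batch size, sample size, and number of simulated studies are hypothetical choices for illustration. It assumes data drawn from a standard normal distribution and uses a one-sample z-test that assumes the standard deviation is known to be 1. Testing once at the planned sample size should produce false positives about 5% of the time. Peeking after every batch is expected to push that rate much higher.

```python
import random
from math import sqrt
from statistics import NormalDist


def z_test_p_value(samples: list[float]) -> float:
    """Two-sided p-value for 'the true mean is 0', assuming known SD = 1."""
    z = (sum(samples) / len(samples)) * sqrt(len(samples))
    return 2 * NormalDist().cdf(-abs(z))


def run_study(rng: random.Random, batch: int, max_n: int, peek: bool) -> bool:
    """Simulate one study with NO real effect; return True if it 'finds' one.

    With peek=True, the p-value is checked after every batch and the study
    stops as soon as p < 0.05 (optional stopping). With peek=False, it is
    tested only once, at the planned sample size max_n.
    """
    samples: list[float] = []
    while len(samples) < max_n:
        samples.extend(rng.gauss(0.0, 1.0) for _ in range(batch))
        if peek and z_test_p_value(samples) < 0.05:
            return True
    return z_test_p_value(samples) < 0.05


def false_positive_rate(peek: bool, n_studies: int = 500, seed: int = 1) -> float:
    """Fraction of null studies that end up declared 'significant'."""
    rng = random.Random(seed)
    hits = sum(run_study(rng, batch=10, max_n=200, peek=peek) for _ in range(n_studies))
    return hits / n_studies


print(f"test once at the end:  {false_positive_rate(peek=False):.1%} false positives")
print(f"peek after each batch: {false_positive_rate(peek=True):.1%} false positives")
```

The data-generating process is identical in both runs. Only the decision rule changes, and that alone manufactures extra "significant" findings.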

The 538 site has several excellent posts on p-hacking. In this one, they posted a chart showing the results of a survey they ran that found, among other things, statistically significant relationships between eating egg rolls and owning a dog, and between using salt and feeling good about your internet provider. Here’s the full chart:

That particular post was focused on the challenges of doing meaningful dietary studies. If you’d like to be grossed out and give up on ever taking food science seriously again, go to the end of the post and watch their short video on the impossibility of comparing, well, apples to apples…
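Why would a survey turn up a link between egg rolls and dog ownership? Part of the answer is sheer volume. Ask enough questions and compare every answer with every other, and some pairs will cross p < 0.05 by chance alone. The minimal Python sketch below (standard library only) assumes a hypothetical survey in which every yes/no answer is an independent coin flip, so no real relationships exist. The respondent count, question counts, seed, and function names are illustrative assumptions, not details of the 538 survey. It runs a pooled two-proportion z-test on every food/outcome pair and counts how many come out "significant."

```python
import random
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(yes_a: int, n_a: int, yes_b: int, n_b: int) -> float:
    """Two-sided p-value for equal proportions in two groups (pooled z-test)."""
    pooled = (yes_a + yes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (yes_a / n_a - yes_b / n_b) / se
    return 2 * NormalDist().cdf(-abs(z))


def spurious_findings(n_people: int = 500, n_foods: int = 20,
                      n_outcomes: int = 10, seed: int = 7) -> list[tuple[int, int, float]]:
    """Test every (food, outcome) pair in a purely random, hypothetical survey."""
    rng = random.Random(seed)
    # Every answer is an independent coin flip, so there are no real links.
    foods = [[rng.random() < 0.5 for _ in range(n_people)] for _ in range(n_foods)]
    outcomes = [[rng.random() < 0.5 for _ in range(n_people)] for _ in range(n_outcomes)]

    results = []
    for f, eats in enumerate(foods):
        for o, has_outcome in enumerate(outcomes):
            # Compare the outcome rate among eaters vs. non-eaters.
            eaters = [h for e, h in zip(eats, has_outcome) if e]
            others = [h for e, h in zip(eats, has_outcome) if not e]
            p = two_proportion_p_value(sum(eaters), len(eaters), sum(others), len(others))
            results.append((f, o, p))
    return results


results = spurious_findings()
significant = [r for r in results if r[2] < 0.05]
print(f"{len(significant)} of {len(results)} pairs are 'significant' at p < 0.05")
```

With 200 comparisons and no real effects, roughly 1 in 20 is expected to clear the p < 0.05 bar, which is exactly what that threshold means. Report only those pairs and you have a headline.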

I’ve written many previous posts arguing that we can’t reduce everything to sound bites. We have to dig into the details. Here’s another example from the site:

…a 2013 study found that people who ate three servings of nuts per week had a nearly 40 percent reduction in mortality risk. If nibbling nuts really cut the risk of dying by 40 percent, it would be revolutionary, but the figure is almost certainly an overstatement, Ioannidis told me. It’s also meaningless without context. Can a 90-year-old get the same benefits as a 60-year-old? How many days or years must you spend eating nuts for the benefits to kick in, and how long does the effect last? These are the questions that people really want answers to. But as our experiment demonstrated, it’s easy to use nutrition surveys to link foods to outcomes, yet it’s difficult to know what these connections mean.

Isn’t that the bottom line? We have to understand how the statistics relate to each other and to our own lives, because not everything is meaningful. It’s like the corruption stats from the earlier posts: we need to look at relationships and context to come up with meaningful applications.

The same site posted a very fine article called “Science Isn’t Broken: It’s just a hell of a lot harder than we give it credit for” which concludes most appropriately:

The scientific method is the most rigorous path to knowledge, but it’s also messy and tough. Science deserves respect exactly because it is difficult — not because it gets everything correct on the first try. The uncertainty inherent in science doesn’t mean that we can’t use it to make important policies or decisions. It just means that we should remain cautious and adopt a mindset that’s open to changing course if new data arises. We should make the best decisions we can with the current evidence and take care not to lose sight of its strength and degree of certainty. It’s no accident that every good paper includes the phrase “more study is needed” — there is always more to learn.